Error Metrics: these fall into two groups, Classification Metrics and Regression Metrics.

The choice of metric depends on the type of model and how it is implemented.

Classification Metrics

- Confusion Matrix

- Accuracy

- Misclassification Error

- Specificity

- Recall

Besides the above, many other metrics are available for evaluating a classification model.

Regression Metrics

- MSE

- RMSE

- MAE

- MAPE

Confusion Matrix

**Always build the Confusion Matrix on Test Data, not on Train Data.**

- It is used to describe the performance of the Model.

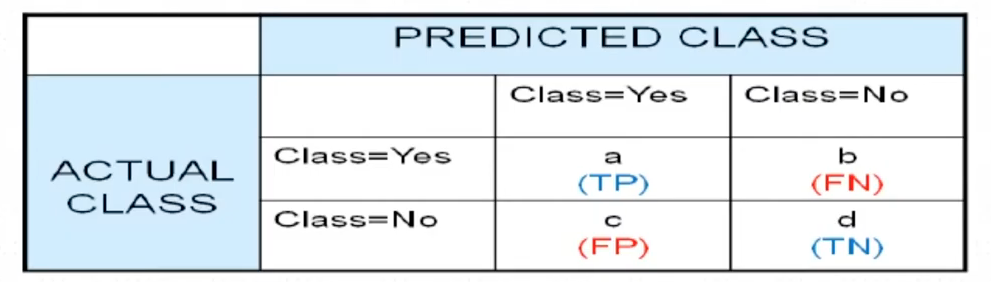

- Rows represent actual values and columns represent predicted values.

- It is used for binary or multi-class classification problems.

- If our Target variable has two or more classes then we can develop confusion matrix based on that.

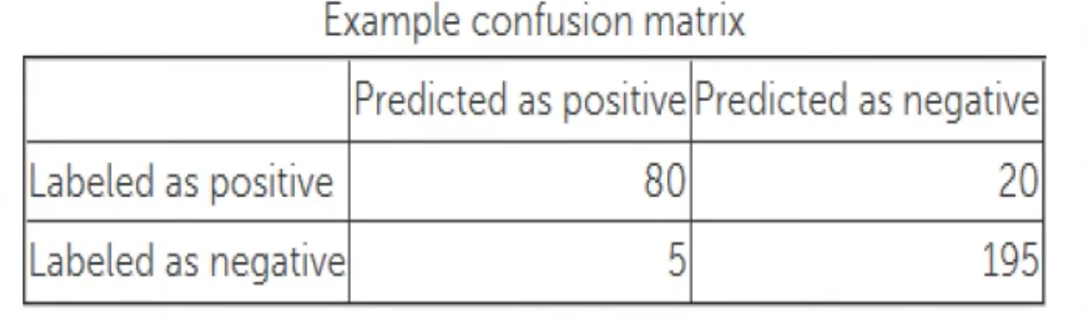

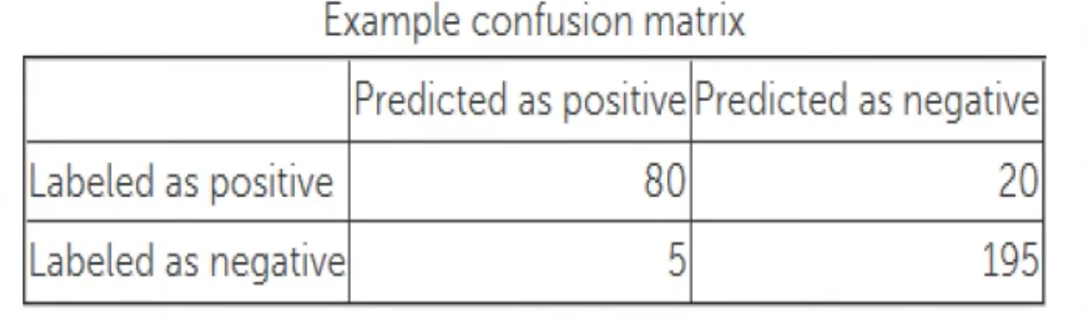

- The matrix size will be n*n, where n is the number of classes in the Target Variable. The cells of the confusion matrix, shown in the image below, are defined as follows.

True Positive - Actually Positive (Yes), also predicted Positive (Yes).

True Negative - Actually Negative (No), also predicted Negative (No).

False Positive - Actually Negative (No), but predicted Positive (Yes).

False Negative - Actually Positive (Yes), but predicted Negative (No).

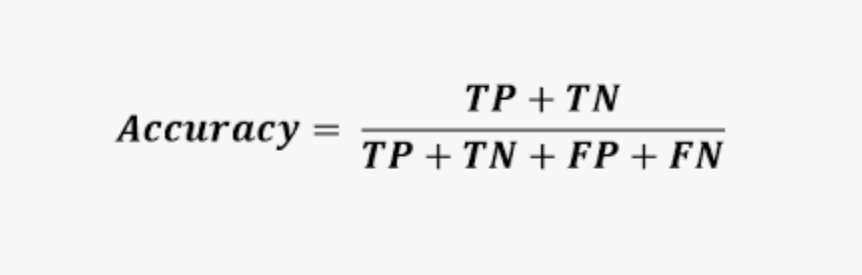
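The four cells can be counted directly from paired actual and predicted labels. The following is a minimal sketch in plain Python, assuming binary labels encoded as "Yes"/"No"; the function name `confusion_counts` and the sample labels are made up for illustration and are not taken from the images above.

```python
def confusion_counts(actual, predicted, positive="Yes"):
    """Count TP, TN, FP and FN for a binary classifier."""
    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if a == positive and p == positive:
            tp += 1  # actually positive, predicted positive
        elif a != positive and p != positive:
            tn += 1  # actually negative, predicted negative
        elif a != positive and p == positive:
            fp += 1  # actually negative, predicted positive
        else:
            fn += 1  # actually positive, predicted negative
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


# Hypothetical test-set labels (illustrative only).
actual = ["Yes", "No", "Yes", "No", "No", "Yes"]
predicted = ["Yes", "No", "No", "Yes", "No", "Yes"]

counts = confusion_counts(actual, predicted)
print(counts)  # {'TP': 2, 'TN': 2, 'FP': 1, 'FN': 1}
```

In line with the note above, the labels passed in should come from the test data, not the training data.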

Accuracy

Accuracy is calculated from the confusion matrix: it is the proportion of all observations that are classified correctly.

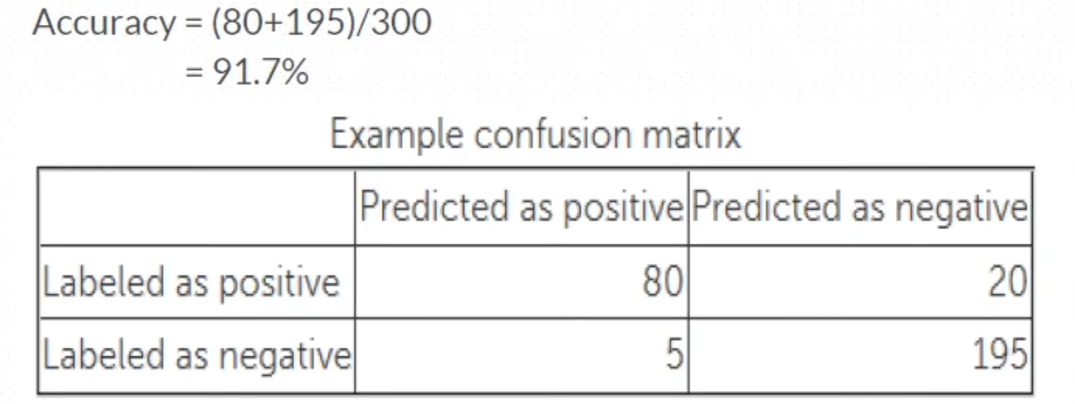

Accuracy = (TP + TN) / Total Observations, where Total Observations = TP + TN + FP + FN

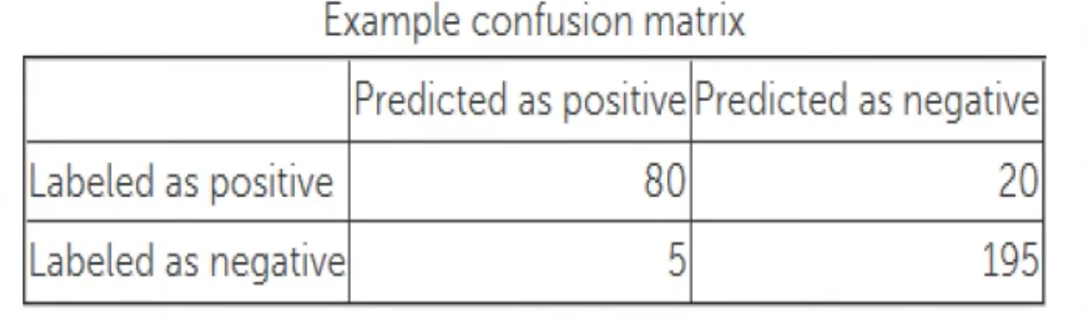
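As a quick check of the arithmetic, here is a small sketch that computes accuracy from the four confusion-matrix counts. The counts used (TP=45, TN=40, FP=10, FN=5) are hypothetical and are not the values shown in the images.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all observations that were classified correctly."""
    total = tp + tn + fp + fn
    return (tp + tn) / total


# Hypothetical counts for illustration: 85 correct out of 100.
acc = accuracy(tp=45, tn=40, fp=10, fn=5)
print(acc)  # 0.85
```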

Example

Misclassification Error

Misclassification error is the error of classifying a record as belonging to one class when it actually belongs to another. The error rate, calculated from the confusion matrix above, is shown below.

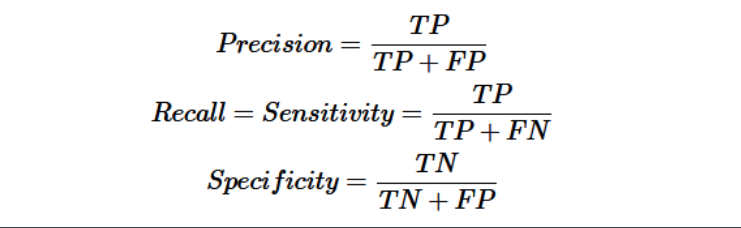

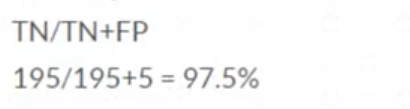
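The error rate can be sketched in a few lines, assuming the usual definition (FP + FN) / (TP + TN + FP + FN), which is the complement of accuracy. The counts below are hypothetical.

```python
def misclassification_error(tp, tn, fp, fn):
    """Share of all observations that were classified incorrectly."""
    return (fp + fn) / (tp + tn + fp + fn)


# Hypothetical counts for illustration: 15 wrong out of 100.
err = misclassification_error(tp=45, tn=40, fp=10, fn=5)
print(err)  # 0.15
```

With the same counts, accuracy would be 0.85, so the two values add up to 1.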

Specificity

- The proportion of actual negative cases which are correctly identified.

- Specificity = TN / (TN + FP)

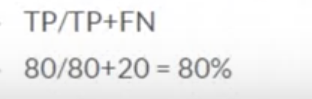
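A minimal sketch of the specificity calculation, using hypothetical counts. Only the actual negative cases (TN and FP) enter the formula.

```python
def specificity(tn, fp):
    """Share of actual negative cases that were predicted negative."""
    return tn / (tn + fp)


# Hypothetical counts: 50 actual negatives, 40 of them identified correctly.
spec = specificity(tn=40, fp=10)
print(spec)  # 0.8
```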

Recall

- The proportion of actual positive cases which are correctly identified.

- Recall = TP / (TP + FN)
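Recall mirrors specificity but looks only at the actual positive cases (TP and FN). A short sketch with hypothetical counts:

```python
def recall(tp, fn):
    """Share of actual positive cases that were predicted positive."""
    return tp / (tp + fn)


# Hypothetical counts: 50 actual positives, 45 of them identified correctly.
rec = recall(tp=45, fn=5)
print(rec)  # 0.9
```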

Regression Metrics

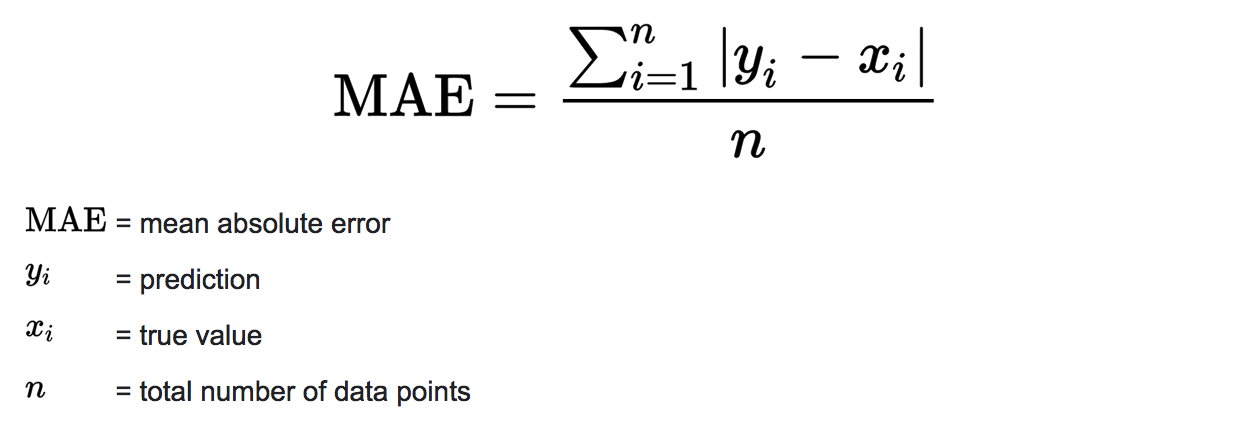

MAE

- Mean Absolute Error

- Average of the absolute errors

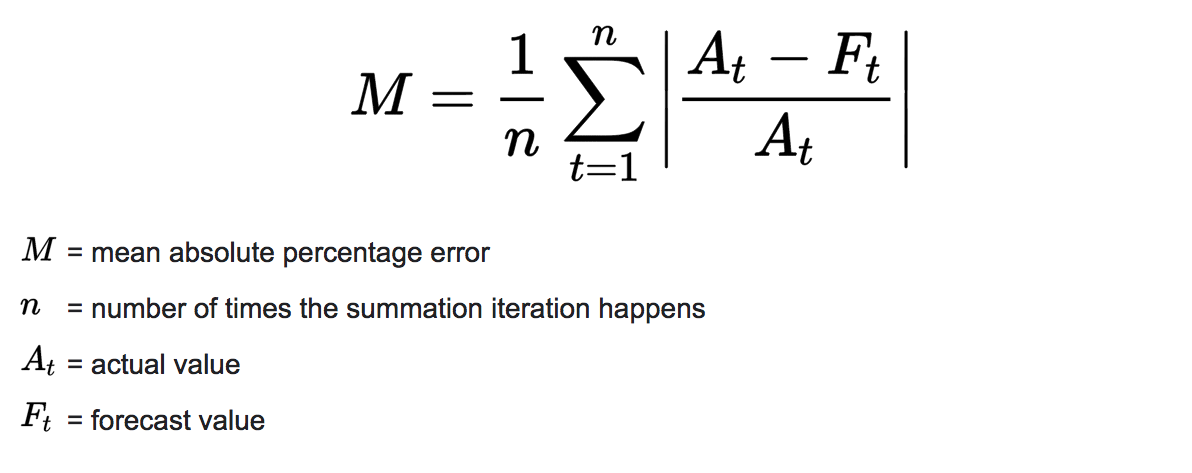
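To make the "average of the absolute errors" concrete, here is a minimal sketch in plain Python. The function name and the sample values are hypothetical.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all observations."""
    errors = [abs(a - p) for a, p in zip(actual, predicted)]
    return sum(errors) / len(errors)


# Hypothetical actual and predicted values.
actual = [100, 150, 200, 250]
predicted = [110, 140, 195, 265]

mae = mean_absolute_error(actual, predicted)
print(mae)  # 10.0
```

The absolute errors here are 10, 10, 5 and 15, so the average is 40 / 4 = 10, in the same units as the target variable.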

MAPE

- Mean Absolute Percentage Error

- Expresses the average absolute error as a percentage of the actual values

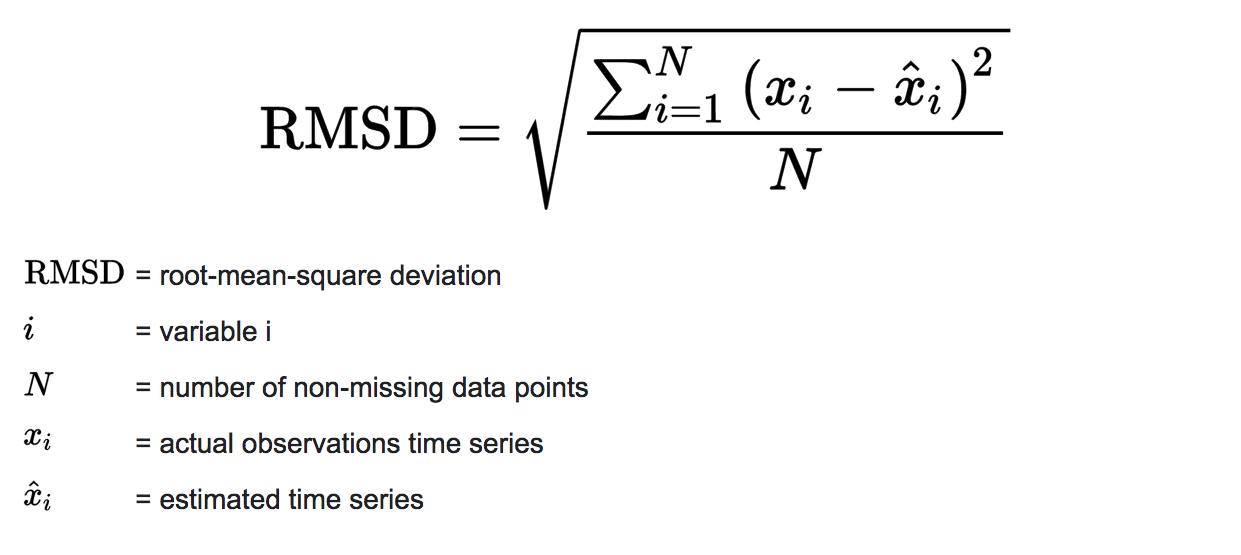
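A small sketch of MAPE, assuming the common definition: the mean of |actual - predicted| / |actual|, multiplied by 100. The function name and the sample values are hypothetical. Note that this definition breaks down when any actual value is zero.

```python
def mean_absolute_percentage_error(actual, predicted):
    """Average absolute error relative to the actual value, in percent."""
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100 * sum(ratios) / len(ratios)


# Hypothetical actual and predicted values (no zeros among the actuals).
actual = [100, 200, 400, 500]
predicted = [110, 180, 400, 450]

mape = mean_absolute_percentage_error(actual, predicted)
print(round(mape, 2))  # 7.5
```

The relative errors are 10%, 10%, 0% and 10%, which average to 7.5%.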

RMSE/RMSD

- Root Mean Squared Error/Deviation

- Square root of the average of the squared errors; often used to evaluate forecasts, including time-series models
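RMSE is the square root of MSE (the mean of the squared errors), so one sketch can cover both. The function names and the sample values below are hypothetical.

```python
import math


def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return sum(squared) / len(squared)


def root_mean_squared_error(actual, predicted):
    """Square root of MSE, in the same units as the target variable."""
    return math.sqrt(mean_squared_error(actual, predicted))


# Hypothetical actual and predicted values.
actual = [10, 20, 30, 40]
predicted = [12, 16, 34, 40]

mse = mean_squared_error(actual, predicted)
rmse = root_mean_squared_error(actual, predicted)
print(mse, rmse)  # 9.0 3.0
```

For the same values the mean absolute error would be 2.5. RMSE comes out higher because squaring gives more weight to the larger errors.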

Next, we will look at error metrics with a Python implementation.